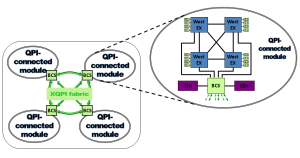

In Bull’s BCS Architecture – Deep Dive – Part 1, I listed the two key functionalities of the BCS: CPU caching and the resilient eXternal Node-Controller fabric.

Now let’s dive deep into these two key functionalities. Bear with me: it is quite technical.

Enhanced system performance with CPU Caching

CPU caching provides significant benefits for system performance:

- Minimizes inter-processor coherency communication and reduces latency to local memory. Processors in each 4-socket module have access to the smart CPU cache state stored in the eXternal Node Controller, thus eliminating the overhead of requesting and receiving updates from all other processors.

- Dynamic routing of traffic. When an inter-node-controller link is overused, Bull’s dynamic routing design avoids a performance bottleneck by routing traffic through the least-used path. The system uses all available lanes and maintains full bandwidth.
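To make the least-used-path idea concrete, here is a minimal sketch in Python. It assumes a simplified model in which every inter-node-controller link reports its current utilization and a route is rated by its busiest link. The link names, the utilization figures and the `pick_route` helper are hypothetical illustrations, not Bull’s actual routing logic.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Path:
    """One candidate route between two node controllers (hypothetical model)."""
    name: str
    links: List[str]  # IDs of the links this route traverses, in order


def path_load(path: Path, link_load: Dict[str, float]) -> float:
    # A route is only as free as its busiest link, so rate it by the maximum utilization.
    return max(link_load[link] for link in path.links)


def pick_route(paths: List[Path], link_load: Dict[str, float]) -> Path:
    """Return the candidate route whose busiest link is the least utilized."""
    return min(paths, key=lambda p: path_load(p, link_load))


# Utilization of each link as a fraction of its capacity (made-up numbers).
link_load = {"A-B": 0.90, "A-C": 0.20, "C-B": 0.30}
paths = [Path("direct", ["A-B"]), Path("via C", ["A-C", "C-B"])]

print(pick_route(paths, link_load).name)  # via C
```

While the direct A-B link is busy, traffic detours through C; as soon as A-B becomes less loaded than the detour, `pick_route` returns the direct path again.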

With the Bull BCS architecture, thanks to CPU caching, coherency snoop responses consume only 5 to 10% of the bandwidth of Intel QPI and of the switch fabric. The Bull implementation provides local memory access latency comparable to that of regular 4-socket systems and 44% lower than that of 8-socket ‘glueless’ systems.

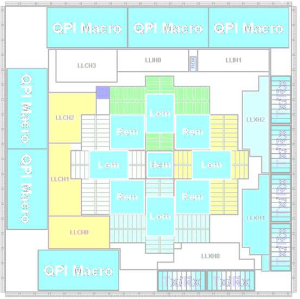

Via the eXtended QPI (XQPI) network, a Bull 4-socket module communicates with the other three modules as if all four formed a single 16-socket system. Therefore all accesses to local memory have the bandwidth and latency of a regular 4-socket system. Each BCS also has an embedded directory of 144 SRAMs of 20 Mb each, for a total memory of 72 MB.
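The local/remote distinction can be sketched as follows, assuming the 16 sockets are numbered 0 to 15 with four consecutive sockets per module. This numbering and the helper names are hypothetical; they only illustrate which accesses stay inside a module and which must cross the XQPI fabric.

```python
SOCKETS_PER_MODULE = 4  # one 4-socket module per BCS
MODULES = 4             # 4 modules -> a 16-socket system


def module_of(socket: int) -> int:
    """Return the module (and therefore the BCS) a socket belongs to."""
    if not 0 <= socket < SOCKETS_PER_MODULE * MODULES:
        raise ValueError(f"socket {socket} is out of range")
    return socket // SOCKETS_PER_MODULE


def crosses_xqpi(requesting_socket: int, home_socket: int) -> bool:
    """True if an access to memory homed on home_socket must leave the module."""
    return module_of(requesting_socket) != module_of(home_socket)


print(crosses_xqpi(0, 3))   # False: same module, regular 4-socket latency
print(crosses_xqpi(0, 12))  # True: goes through the BCS and the XQPI fabric
```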

Moreover, the BCS provides 2x MORE eXtended QPI links to interconnect additional 4-socket modules, whereas an 8-socket ‘glueless’ system offers only 4 Intel QPI links. Those links are also used more efficiently. By recording when a cache in a remote 4-socket module has a copy of a memory line, the BCS eXternal Node-Controller can respond to each source snoop on behalf of all remote caches. This keeps snoop traffic from consuming bandwidth AND reduces memory latency.
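A minimal sketch of that directory idea in Python follows. It assumes a simplified model in which the BCS keeps, for each memory line homed in its module, the set of remote modules holding a copy, and forwards a snoop only to those modules. The class and method names are hypothetical, and the real BCS directory format is not described in this post. The two counters compare the resulting fabric traffic with a plain broadcast to the other three modules.

```python
from collections import defaultdict
from typing import DefaultDict, Set


class NodeControllerDirectory:
    """Hypothetical model of the directory kept by the BCS of one 4-socket module.

    For every memory line homed in this module it records which remote modules
    hold a copy, so a local source snoop only has to reach those modules instead
    of being broadcast to all of them.
    """

    def __init__(self, num_modules: int = 4):
        self.num_modules = num_modules
        self.sharers: DefaultDict[int, Set[int]] = defaultdict(set)
        self.fabric_snoops = 0     # snoops actually sent over the XQPI fabric
        self.broadcast_snoops = 0  # what a broadcast protocol would have sent

    def record_remote_copy(self, line: int, remote_module: int) -> None:
        self.sharers[line].add(remote_module)

    def evict_remote_copy(self, line: int, remote_module: int) -> None:
        self.sharers[line].discard(remote_module)
        if not self.sharers[line]:
            del self.sharers[line]

    def snoop(self, line: int) -> Set[int]:
        """Answer a local source snoop on behalf of all remote caches."""
        holders = set(self.sharers.get(line, ()))
        self.fabric_snoops += len(holders)             # only modules that hold the line
        self.broadcast_snoops += self.num_modules - 1  # every other module
        return holders


directory = NodeControllerDirectory()
directory.record_remote_copy(line=0x40, remote_module=2)
print(directory.snoop(0x40))  # {2}
print(directory.snoop(0x80))  # set(): answered locally, no XQPI traffic
print(directory.fabric_snoops, directory.broadcast_snoops)  # 1 6
```

In this toy run one snoop crosses the fabric instead of six, which is the effect the paragraph above describes: fewer snoop messages on the links and no wait for replies from modules that do not hold the line.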

Enhanced reliability with Resilient System Fabric

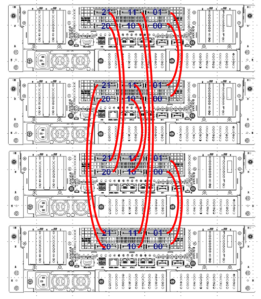

Bull BCS Architecture extends the advanced reliability of the Intel Xeon E7-4800 series processors with a resilient eXtended-QPI (X-QPI) fabric. The BCS X-QPI fabric enables:

- No additional hops to reach the information held in any of the other processors’ caches.

- Redundant data paths. Should an X-QPI link fail, a redundant X-QPI link automatically takes over.

- Rapid recovery thanks to improved error logging and diagnostic information.
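The failover behavior in the list above can be sketched as follows, assuming a simple primary/standby pair of X-QPI links plus an error log used for diagnostics. The `RedundantLink` class and the link names are hypothetical; in the real system the switch-over is performed by the hardware, not by software like this.

```python
from typing import List, Set


class RedundantLink:
    """Hypothetical primary/standby pair of X-QPI links between two BCS chips."""

    def __init__(self, primary: str, standby: str):
        self.links = [primary, standby]
        self.failed: Set[str] = set()
        self.error_log: List[str] = []  # kept for diagnostics and faster recovery

    def active(self) -> str:
        """Return the first link that has not failed."""
        for link in self.links:
            if link not in self.failed:
                return link
        raise RuntimeError("all redundant X-QPI links have failed")

    def report_failure(self, link: str) -> None:
        """Mark a link as failed; traffic moves to the next good link.

        active() raises RuntimeError if no redundant link is left.
        """
        self.failed.add(link)
        self.error_log.append(f"{link} failed, traffic moved to {self.active()}")


pair = RedundantLink("xqpi-0", "xqpi-1")
pair.report_failure("xqpi-0")
print(pair.active())   # xqpi-1
print(pair.error_log)  # ['xqpi-0 failed, traffic moved to xqpi-1']
```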

What about RAS features?

Bull designed the BCS with RAS (Reliability, Availability and Serviceability) features consistent with Intel’s QPI RAS features.

The point-to-point links that connect the chips in the bullion system, whether QPI, Scalable Memory Interconnect (SMI) or the BCS fabric, have many RAS features in common, including:

- Cyclic Redundancy Check (CRC)

- Link Level Retry (LLR)

- Link Width Reduction (LWR)

- Link Retrain
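CRC and Link Level Retry work together: the sender protects each transfer with a checksum and keeps a copy in a retry buffer until it is known to have arrived intact, and the receiver drops anything whose checksum does not match so that it gets resent. The Python sketch below illustrates that loop under explicit assumptions: it uses `zlib.crc32` purely as a stand-in (the real QPI/X-QPI link-layer CRC and transfer format are different), and the channel function and helper names are hypothetical.

```python
import zlib
from typing import Callable, Optional, Tuple


def frame(payload: bytes) -> bytes:
    """Append a CRC-32 (stand-in for the link-layer CRC) to the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "little")


def check(framed: bytes) -> Optional[bytes]:
    """Return the payload if its CRC matches, or None if it was corrupted in flight."""
    payload, crc = framed[:-4], framed[-4:]
    return payload if zlib.crc32(payload).to_bytes(4, "little") == crc else None


def send_with_retry(payload: bytes,
                    channel: Callable[[bytes, int], bytes],
                    max_retries: int = 3) -> Tuple[bytes, int]:
    """Link Level Retry: resend from the retry buffer until the CRC checks out."""
    retry_buffer = frame(payload)  # held by the sender until the data is acknowledged
    for attempt in range(1 + max_retries):
        received = check(channel(retry_buffer, attempt))
        if received is not None:
            return received, attempt  # acknowledged; attempt = number of retries used
    # Assumption: a real link would escalate at this point, e.g. to a Link Retrain.
    raise RuntimeError("transfer still failing after retries")


def flaky_channel(data: bytes, attempt: int) -> bytes:
    """Simulated link that flips one bit on the first transmission only."""
    if attempt == 0:
        return bytes([data[0] ^ 0x01]) + data[1:]
    return data


print(send_with_retry(b"cache line", flaky_channel))  # (b'cache line', 1)
```

The transient bit flip is caught by the CRC and fixed by a single retry, so the upper layers only ever see the correct data.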

All the link resiliency features above apply to both Intel QPI/SMI and the X-QPI fabric (BCS). They are transparent to the hypervisor, and the system remains operational.
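Link Width Reduction is one of the mechanisms that keeps the system operational after a lane fails: the link keeps running on fewer lanes at reduced bandwidth instead of going down. As a minimal sketch, assume a 20-lane link whose lanes are grouped into four quadrants of 5 and that may only run at full, half or quarter width. These widths are illustrative assumptions rather than figures from this post, and `reduced_width` is a hypothetical helper.

```python
from typing import Set

LANES = 20                # assumed full link width
QUADRANT = 5              # assumed size of the lane groups the link can drop
VALID_WIDTHS = (4, 2, 1)  # full, half and quarter width, in quadrants (assumption)


def reduced_width(failed_lanes: Set[int]) -> int:
    """Widest allowed width (in lanes) using only quadrants with no failed lane."""
    good_quadrants = sum(
        1
        for q in range(LANES // QUADRANT)
        if not failed_lanes & set(range(q * QUADRANT, (q + 1) * QUADRANT))
    )
    for quadrants in VALID_WIDTHS:
        if good_quadrants >= quadrants:
            return quadrants * QUADRANT
    return 0  # no usable quadrant left: the link is down


for failed in (set(), {7}, {0, 6, 12}, {0, 6, 12, 18}):
    width = reduced_width(failed)
    print(sorted(failed), width, f"{width / LANES:.0%} bandwidth")
```

With one failed lane the sketch falls back to half width (10 lanes, 50% bandwidth); only when every quadrant has a bad lane does the link go down.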

In Part 3, I will write about how Bull improves memory reliability by forwarding detected memory errors directly to the VMware hypervisor to avoid the purple screen of death. This is not science fiction! It is available in a shop near you 🙂

Source: Bull, Intel

This architecture is awesome. However, it seems that communication between modules is still expensive in terms of time, because cache coherency relies on the QPI source-broadcast snoopy protocol. In my experience, a parallel Java program gained less speed-up running on 60 cores than on 40 cores. Is there any way to overcome this bottleneck?

Thanks for your comment.

You’re correct! Traversing nodes for cache coherency is expensive. If your application is that latency-sensitive, it is better to run it within the boundary of a NUMA node (1 processor, 10 cores, xx GB of memory) on a single node (no BCS).

Not sure if your Java app can cope with that anyway…

Anyway, latency is always higher in a tightly coupled environment such as the bullion…