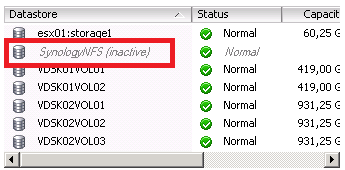

Here is the issue: this small Synology NAS rebooted unexpectedly due to a power failure on circuit A. Since then, my ESX4 hosts have marked the datastore as inactive. This is how it looks from a host perspective.

From the COS I ran cat /var/log/messages | grep nfs:

esx01 kernel: [8014830.569310] nfsd: last server has exited

esx01 kernel: [8014830.575333] nfsd: unexporting all filesystems

esx01 kernel: [8014830.677055] NFSD: Using /var/lib/nfs/v4recovery as the NFSv4 state recovery directory

In ESX3.5 it could be a hassle to troubleshoot this kind of issue. Fortunately, in ESX(i)4.x it’s much easier. In my case, the setup itself was OK, as it had worked fine until the power outage unexpectedly brought down the small NAS device on my network.

First I checked that the NAS is up and running with the NFS server service started. That was OK.

Next I checked that my host still sees the NAS device. For that I used the vmkping command: vmkping < NAS_IP_Address > and that was also OK, I could ‘ping’ the NAS device from the host’s dedicated vmkernel portgroup.

Next, on the host, I wanted to make sure the NAS device was listening for NFS mount requests, and to check several other settings. For that I started the portmap service on the host: service portmap start. Then I ran the command rpcinfo -p < NAS_IP_Address > and the output was:

program vers proto port

100000 2 tcp 111 portmapper

100000 2 udp 111 portmapper

100003 2 udp 2049 nfs

100003 3 udp 2049 nfs -> FYI ESXi4.x doesn’t use UDP to connect to an NFS share.

100021 1 udp 39704 nlockmgr

100021 3 udp 39704 nlockmgr

100021 4 udp 39704 nlockmgr

100021 1 tcp 45784 nlockmgr

100021 3 tcp 45784 nlockmgr

100021 4 tcp 45784 nlockmgr

100003 2 tcp 2049 nfs

100003 3 tcp 2049 nfs -> The NAS runs NFSv3 on TCP 2049. Just what ESXi4.x requires.

100024 1 udp 57176 status

100024 1 tcp 49627 status

100005 1 udp 892 mountd

100005 1 tcp 892 mountd -> Required to mount the NFS share

100005 2 udp 892 mountd

100005 2 tcp 892 mountd

100005 3 udp 892 mountd

100005 3 tcp 892 mountd

I’m now sure that there is nothing that can prevent me from mounting the NFS share… By the way, let’s stop the portmap service as we don’t need it anymore: service portmap stop
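The manual reading of the rpcinfo output above can be scripted. Here is a minimal sketch in Python, assuming the output was captured to a string (for example on an admin workstation). The names `parse_rpcinfo` and `missing_for_esx` are hypothetical helpers made up for this illustration, and the "required" set is only what the annotations above call out: NFSv3 on TCP, plus mountd on TCP.

```python
from typing import List, NamedTuple


class RpcService(NamedTuple):
    program: int
    version: int
    proto: str
    port: int
    name: str


def parse_rpcinfo(output: str) -> List[RpcService]:
    """Parse the text printed by `rpcinfo -p <host>` into records."""
    services = []
    for line in output.splitlines():
        fields = line.split()
        # Skip blank lines and the "program vers proto port" header.
        if len(fields) < 5 or not fields[0].isdigit():
            continue
        program, version, proto, port, name = fields[:5]
        services.append(
            RpcService(int(program), int(version), proto, int(port), name)
        )
    return services


def missing_for_esx(services: List[RpcService]) -> List[str]:
    """Return what is missing, per the assumptions stated in the text above."""
    tcp = {(s.name, s.version) for s in services if s.proto == "tcp"}
    missing = []
    if ("nfs", 3) not in tcp:
        missing.append("nfs v3 over tcp")
    if not any(name == "mountd" for name, _ in tcp):
        missing.append("mountd over tcp")
    return missing


# A few lines taken from the output shown above.
SAMPLE = """
program vers proto port
100000 2 tcp 111 portmapper
100003 3 udp 2049 nfs
100003 3 tcp 2049 nfs
100005 1 tcp 892 mountd
"""

if __name__ == "__main__":
    print(missing_for_esx(parse_rpcinfo(SAMPLE)) or "nothing missing")
```

An empty list only says the NAS advertises the services; as the rest of this post shows, it says nothing about whether the host's existing mount is actually usable.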

I’m good to check the mount with the following command: esxcfg-nas -l. The output left me somewhat baffled, check it out below…

SynologyNFS is /volume1/Backups from 192.168.0.2 mounted <- Do I read correctly, it says mounted!?

Yes indeed: although it is mounted, the connection is NOT up. You can double-check this in the /vmfs/volumes folder (see the picture below), where the datastore is marked in red.
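If you want to keep track of what the host reports, the single line printed by esxcfg-nas -l is easy to pick apart. The sketch below assumes the exact line shape shown above ("label is share from host state") and a datastore label without spaces; `NasEntry` and `parse_nas_line` are hypothetical names used only for this illustration.

```python
import re
from typing import NamedTuple, Optional


class NasEntry(NamedTuple):
    label: str
    share: str
    host: str
    state: str


# Assumed shape: "<label> is <share> from <host> <state>"
_LINE = re.compile(
    r"^(?P<label>\S+) is (?P<share>\S+) from (?P<host>\S+) (?P<state>.+)$"
)


def parse_nas_line(line: str) -> Optional[NasEntry]:
    """Return the parsed entry, or None if the line has another shape."""
    match = _LINE.match(line.strip())
    return NasEntry(**match.groupdict()) if match else None


if __name__ == "__main__":
    entry = parse_nas_line(
        "SynologyNFS is /volume1/Backups from 192.168.0.2 mounted"
    )
    print(entry)
```

Keep in mind the lesson from above: a state of "mounted" is only what the host believes, so treat it as a hint and not as proof that the datastore is reachable.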

I could not afford a reboot of the host and I had to get this NFS share back up before the backups kick in tonight… I’m dead meat I thought for a second 😦

Googling for the error messages led nowhere. This is an ESXi4.x and not a regular Linux server/client setup.

Also, something that is not clear to everybody: the VMKERNEL does the mount, NOT the COS (or the BusyBox in the case of ESXi).

Yes, I know: if you had to mount the share WITHIN the COS, you would have to start portmap and nfs, open the firewall for NFS client traffic, check /etc/exports, etc… But again, in my case I use a vmkernel portgroup, not the COS!

Anyway, let’s come back to my issue here, that is, I can’t access the NFS share although it is mounted by the vmkernel… I left Google for the VMware web site and found a VMware KB article that described my symptoms, with a simple two-step fix!

- Unmount the NFS datastore: esxcfg-nas -d < DatastoreName >

- Mount the NFS datastore: esxcfg-nas -a -o < NAS_IP_Address > -s < Share_mount_point_on_the_NFS > < DatastoreName >
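To avoid typos when you are in a hurry, the two steps above can be generated from the three values you already know. This is a minimal sketch that only builds and prints the command lines, it does not run anything. `remount_commands` is a hypothetical helper, and note that -d is given the datastore label here, since that is how esxcfg-nas identifies the mount to remove.

```python
import shlex
from typing import List


def remount_commands(label: str, host: str, share: str) -> List[List[str]]:
    """Build the unmount and mount command lines for one NFS datastore."""
    return [
        # Step 1: remove the stale mount, identified by its datastore label.
        ["esxcfg-nas", "-d", label],
        # Step 2: add it back with the same host, share and label.
        ["esxcfg-nas", "-a", "-o", host, "-s", share, label],
    ]


if __name__ == "__main__":
    # Values taken from the esxcfg-nas -l output shown earlier in this post.
    for argv in remount_commands("SynologyNFS", "192.168.0.2", "/volume1/Backups"):
        print(" ".join(shlex.quote(arg) for arg in argv))
```

Printing instead of executing is a deliberate choice: you get to read the commands once more before pasting them into the host's console.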

Easy as 1, 2, 3, I was back in business, and a few seconds later the datastore showed up as active again in the vCenter Client. I’m a happy man 🙂

I love VMware KB… -:)

Google + VMware KB saves your life man…

Great article Didier,

@+

Vladan

Thx Vladan, much appreciated!

Cheers,

Didier

I’m OK with calling that a temporary workaround, but not a fix, by the way…

Well, it fixed my problem, that is, it re-activated my datastore. But it’s true that it doesn’t fix the main issue: the NFS datastore becomes and stays inactive when the NFS server is disconnected unexpectedly, even after it eventually comes back online 😦

Cheers,

Didier

I found that I had some flaky connectivity on one of the NIC ports that was part of a trunked port group, where I have designated a vSwitch for NFS traffic only. Under Configuration | Networking | vSwitch Properties | Edit Settings, and then under the NIC Teaming tab, I moved the NIC with intermittent issues to the “Unused Adapters” section, and my NFS Datastores became active again. Next step is troubleshooting my flaky connection on the now isolated and unused port.

Cheers,

-Kirk

Hi Kirk,

Your trunk uses load balancing policy set to “route based on originating virtual port ID” or “route based on IP hash” ?

Also, do you use active/active or active/standby adapters ?

Thx,

Load Balancing based on IP Hash. All of the adapters are Active Adapters. I guess I should list some drawbacks and benefits to this setup.

Drawbacks:

1. Connecting a network cable that is not part of the trunked port group to a NIC port that is part of the port group on the ESX host will kill all connectivity.

2. Should a port have flaky connectivity it can adversely affect all traffic on that port group.

Benefits:

1. Greater aggregate bandwidth

2. Redundancy for port or network failure. (has to be complete failure, otherwise see #2 in Drawbacks above)

3. Redundancy for NIC card failure, as we port group NIC ports from multiple NIC cards.

4. Separate vSwitch for NFS traffic lets me separate NFS traffic from all other network traffic.

5. Furthermore, I have setup a non-routable VLAN for this traffic between the ESX hosts and storage thus limiting broadcast and non storage related traffic.

Disclaimer:

I, by no means, claim to be an expert but, I do however claim to be a continuous student of virtualization. I am not easily offended so feel free to make suggestions and recommendations. I find great benefit in sharing information right or wrong, and learning from both.

Cheers,

-Kirk

Hi Kirk,

-port aggregation/trunking of 8*1Gb gives 1Gb maximum of bandwidth per TCP session and not 8Gb,

-ESXi uses a single TCP session per NFS datastore.

Thus, unless you have multiple NFS datastores, your A/A trunk is useless bandwidth-wise, but not for fault tolerance.
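To see why a single session never spreads over the trunk, here is a small Python sketch of the IP hash idea. It is a simplified model and an assumption on my side, not the vmkernel's actual code: the uplink is picked from the XOR of the source and destination IPv4 addresses, modulo the number of uplinks. The vmkernel address 192.168.0.10 and the second NAS address 192.168.0.3 are made up for the example; `ip_hash_uplink` is a hypothetical name.

```python
import ipaddress


def ip_hash_uplink(src: str, dst: str, uplinks: int) -> int:
    """Simplified IP-hash model: XOR both IPv4 addresses, modulo uplinks."""
    src_int = int(ipaddress.IPv4Address(src))
    dst_int = int(ipaddress.IPv4Address(dst))
    return (src_int ^ dst_int) % uplinks


if __name__ == "__main__":
    vmk_ip = "192.168.0.10"  # hypothetical vmkernel port address
    # Same source/destination pair: always the same uplink, so one
    # NFS session is limited to the bandwidth of one 1Gb link.
    print(ip_hash_uplink(vmk_ip, "192.168.0.2", 8))
    # A different destination address can land on another uplink.
    print(ip_hash_uplink(vmk_ip, "192.168.0.3", 8))
```

In this model the result depends only on the address pair, so extra traffic between the same two IPs stays on the same physical link, however many uplinks the trunk has.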

Trunking requires configuration at the switch, especially for ESXi, which doesn’t speak LACP. A static trunk (sometimes called “static LACP”, with no LACP PDUs exchanged) has to be set on the switch.

Or a more conventional approach, Active/Standby design with default load balancing policy.

I recommend you this article, one of the best ever, regarding designing VMware and NFS arrays:

http://virtualgeek.typepad.com/virtual_geek/2009/06/a-multivendor-post-to-help-our-mutual-nfs-customers-using-vmware.html

Thank you! You are a life saver! I had this problem recently and cycling maintenance mode fixed the issue, but this time it didn’t work. Also, un-mounting the NFS storage wasn’t working from the vSphere Client, so I didn’t know what to do.

I did a re-mount through ssh and I’m back in service!