And tutti quanti, because this time I wanted to dive deep into a bunch of storage acronyms, concepts and terminology that we have all read at some point in VMware documentation, white papers and slides, but also in storage vendors’ publications. Things like PSA, MRU, Active/Active, proxying I/Os, and many more…

I went through many blog posts and read a lot of documentation, white papers, slides, etc. Some are boring, but fortunately the majority are just awesome, with detailed information, animations, graphics, diagrams, and valuable tips.

I’ve tried here to summarize all this information in a single flat blog post, something I can bookmark so that every time I need a quick refresher on the technology, I can have a quick peek at one document.

As the post was getting bigger and bigger, I decided to split it into two parts. I hope to be able to publish the second part next week, so stay tuned 🙂

In the meantime I hope you will find the posts useful, so let’s dive in…

Note: screenshots were taken from ESX 4.0.0 build 208167. The output may vary depending on the ESX version you’re running.

What do the acronyms mean?

- PSA = Pluggable Storage Architecture

- NMP = Native MultiPathing

- MPP = MultiPathing Plugin

- PSP = Path Selection Plugin

- SATP = Storage Array Type Plugin

- ALUA = Asymmetric Logical Unit Access

- MRU = Most Recently Used

- Fixed = Err well this is not an acronym, it just means … fixed!

- RR = Round Robin

- A/P = Active/Passive

- AA/A = Asymmetric Active/Active

- SA/A = Symmetric Active/Active

- …

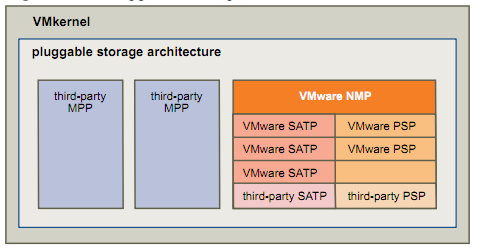

We often see this picture in the VMware documentation. Can you explain it?

[UPDATE]

In vSphere 4.1, the naming has changed. Look at the picture below for the new names in vSphere 4.1. Note that MEM stands for Management Extension Modules, aka PSA-specific vendor plug-ins.

VMkernel

In VMware parlance, the VMkernel is the part of the ESX(i) product that manages interactions with the devices, handles memory allocation, schedules access to the CPU resources, and hosts other components such as the PSA.

What is PSA?

PSA is a special layer of the VMkernel used to manage storage multipathing. Because it is a modular architecture, it allows storage vendors to write plug-ins for their arrays’ specific capabilities.

Why do we need a PSA?

VMware does a good job of talking to the different storage vendors’ devices, but it was missing the last bit needed for tighter integration, high availability and load balancing. Therefore VMware came up with several vStorage APIs that have been published to enable storage partners to integrate their vendor-specific capabilities and provide better performance. The Pluggable Storage Architecture (PSA) was born.

What does PSA do?

It coordinates the simultaneous operation of multiple multipathing plug-ins (MPPs). Yes, that means you could have multiple, different plug-ins installed. But so far only EMC has come up with an MPP, called PowerPath/VE. By the way, did you know that you can use EMC PowerPath/VE to provide multipathing and load-balancing path management not only for EMC storage devices but also for other storage vendors’ devices?

When coordinating the VMware NMP and any installed third-party MPPs, the PSA performs the following tasks:

- Loads and unloads multipathing plug-ins.

- Hides virtual machine specifics from a particular plug-in.

- Routes I/O requests for a specific logical device to the MPP managing that device.

- Handles I/O queuing to the logical devices.

- Implements logical device bandwidth sharing between virtual machines.

- Handles I/O queuing to the physical storage HBAs.

- Handles physical path discovery and removal.

- Provides logical device and physical path I/O statistics.

Thus we have third-party plug-ins called MPPs, but then what is NMP?

The VMkernel multipathing plug-in that ESX/ESXi provides by default is the VMware Native Multipathing Plug-In (NMP). The NMP is an extensible module that manages sub plug-ins. VMware’s generic NMP provides a default path selection algorithm based on the array type listed on the VMware storage HCL, and it associates a set of physical paths with a specific storage device, or LUN.

NMP is responsible for:

- Managing physical path claiming and unclaiming.

- Registering and de-registering logical devices.

- Associating physical paths with logical devices.

- Processing I/O requests to logical devices, that is:

  - Selecting an optimal physical path for the request.

  - Performing actions necessary to handle failures and request retries.

- Supporting management tasks such as abort or reset of logical devices.

So the NMP and MPPs basically do the same thing: the NMP is VMware’s generic multipathing plug-in, and MPPs are third-party multipathing plug-ins.

There is only one NMP, whereas you can have several MPPs loaded for several storage vendors.

What are these sub plug-ins as shown on the pictures?

There are two types of NMP/MPP sub plug-ins: Storage Array Type Plug-Ins (SATPs) and Path Selection Plug-Ins (PSPs). SATPs and PSPs can be built in and provided by VMware’s generic multipathing plug-in (NMP). SATPs and PSPs can also be provided by third-party MPPs.

What is SATP used for?

The specific details of handling path failover for a given storage array, that is the claiming and unclaiming, are delegated to a Storage Array Type Plug-In (SATP). Within the VMware generic NMP plug-in, VMware offers a SATP for every type of array that VMware supports (See VMware HCL). The following command lists the SATP plug-ins that are currently loaded by the host: esxcli nmp satp list

Each of these VMware generic SATP plug-ins has one or several claiming rules. The esxcli nmp satp listrules command outputs a long list of claiming rules for each storage vendor that VMware supports. The screenshot below shows only the claiming rules for HP storage devices.

If you are familiar with HP storage devices you must have noticed something! Indeed, the HP Pxxx family is missing from the list. I’ll come back to this later in part two…

What is PSP used for?

Path Selection Plug-Ins (PSPs) run in conjunction with the VMware NMP and are responsible for choosing a physical path for I/O requests. The VMware NMP assigns a default PSP for every logical device based on the SATP associated with the physical paths for that device. You can override the default PSP.

To get a list of VMware default PSPs, use the following command: esxcli nmp psp list

There are three default PSPs as you can see on the screenshot above: VMW_PSP_MRU, VMW_PSP_RR and VMW_PSP_FIXED
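To make the "default PSP per SATP, unless overridden" rule a bit more tangible, here is a minimal Python sketch. It is not VMware code: the function name `effective_psp` is made up for illustration, and the SATP-to-PSP pairs in the table are assumptions for the sake of the example. The authoritative mapping is whatever esxcli nmp satp list reports on your host.

```python
# Illustrative only: these SATP -> default PSP pairs are assumptions for the
# example; check `esxcli nmp satp list` on your own host for the real values.
DEFAULT_PSP_BY_SATP = {
    "VMW_SATP_DEFAULT_AA": "VMW_PSP_FIXED",
    "VMW_SATP_DEFAULT_AP": "VMW_PSP_MRU",
    "VMW_SATP_ALUA": "VMW_PSP_MRU",
}


def effective_psp(satp, override=None):
    """Return the PSP used for a device.

    The per-device override wins if one is set; otherwise the default PSP
    associated with the device's SATP is used.
    """
    if override is not None:
        return override
    return DEFAULT_PSP_BY_SATP[satp]


default_choice = effective_psp("VMW_SATP_ALUA")
overridden_choice = effective_psp("VMW_SATP_ALUA", override="VMW_PSP_RR")
```

The point of the sketch is only the precedence: the SATP decides the default, and an administrator's explicit choice replaces it for that device.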

- What is MRU aka Most Recently Used aka VMW_PSP_MRU

This plug-in selects the path the ESX/ESXi host used most recently to access the given device. If this path becomes unavailable, the host switches to an alternative path and continues to use the new path while it is available. MRU is VMware’s default policy for A/P storage devices.

Here we have a common misunderstanding. In VMware parlance, an A/P storage device is one where a LUN is owned by a single Storage Processor (SP) at a time. Nowadays it is common to have asymmetric A/A storage devices. Symmetric A/A storage devices, where a LUN can be owned by more than one SP at a time, are still reserved for large enterprises.

For example a HP MSA2324fc with two SPs would be categorized as an A/P kind of storage device by VMware although an esxcli nmp satp listrules | grep HP would output the following:

What makes the difference here is this VMW_SATP_ALUA thing. I talk about ALUA in the second part. In the meantime, just be aware that the ALUA standard identifies asymmetric storage devices with specific capabilities.
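Coming back to the MRU policy itself, its behaviour can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the VMW_PSP_MRU implementation: the class name `MruSelector`, its methods, and the choice of "first available path in discovery order" as the failover target are all hypothetical.

```python
class MruSelector:
    """Toy model of Most Recently Used path selection (hypothetical class)."""

    def __init__(self, paths):
        self.paths = list(paths)      # path names, in discovery order
        self.up = set(self.paths)     # paths currently available
        self.current = self.paths[0]  # the path used most recently

    def set_state(self, path, available):
        """Mark a path as available or unavailable."""
        if available:
            self.up.add(path)
        else:
            self.up.discard(path)

    def select(self):
        # Stay on the current path while it works. There is no failback:
        # a repaired path is not preferred over the one now in use.
        if self.current not in self.up:
            candidates = [p for p in self.paths if p in self.up]
            if not candidates:
                raise RuntimeError("all paths to the device are down")
            self.current = candidates[0]  # assumption: first one available
        return self.current


mru = MruSelector(["vmhba1:C0:T0:L0", "vmhba2:C0:T0:L0"])
first = mru.select()                     # initial path
mru.set_state("vmhba1:C0:T0:L0", False)
after_failure = mru.select()             # switches to the alternative path
mru.set_state("vmhba1:C0:T0:L0", True)
after_repair = mru.select()              # stays on the alternative path
```

The last step is the one worth noticing: once the original path is repaired, MRU keeps using the path it switched to, which is what makes it a safe default for arrays where moving I/O back would also move LUN ownership.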

- What is RR aka Round Robin aka VMW_PSP_RR

It uses a path selection algorithm that rotates through all available optimized paths, enabling some kind of load balancing across those paths. To be clear, RR doesn’t rotate through the non-optimized paths. And if RR has to fail over to non-optimized paths because of an outage of the optimized paths, RR won’t rotate anymore until either the non-optimized paths become optimized (the SP decides to change LUN ownership) or connectivity through the failed optimized paths is restored before LUN ownership is changed.

Another common misunderstanding here: whilst Round Robin does a great job out of the box, for enterprise-class implementations (with VMware Enterprise Plus licenses) it’s just not enough. RR is not an adaptive policy. It’s pretty static: it rotates to the next available path after a fixed number of I/O operations has been sent along the current path. By default this number is set to 1000.

For their EVA line, HP recommends changing that number to 1. The following command helps you do that:

esxcli nmp roundrobin setconfig --type "iops" --iops 1 --device naa.xxxxxxxxx

On the other hand, EMC would just recommend installing PowerPath/VE. This is definitely a good idea, but it is not free, although you could eventually trade in your regular PowerPath licenses for PP/VE.

I do like VMware’s Round Robin multipathing policy. I mean, it’s better than nothing, it’s free, and it does the job. But it has a serious caveat: it does not make any load-balancing decisions based on authoritative information. That is just fine for 50% of the virtual environments, I guess, but not for enterprises or public cloud providers!

Note that the Round Robin (RR) and MRU path policies are ALUA-aware (more about ALUA in the second part), meaning that both path selection policies will first attempt to schedule I/O requests to a LUN through a path that goes through the managing controller.
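To make the Round Robin rotation described above concrete, here is a small Python sketch. It is a simplified model, not the VMW_PSP_RR code: the class `RoundRobinSelector`, its `io_limit` parameter (standing in for the I/O count that defaults to 1000) and the path names are all assumptions made for illustration.

```python
class RoundRobinSelector:
    """Toy model of Round Robin over optimized paths (hypothetical class)."""

    def __init__(self, optimized, non_optimized, io_limit=1000):
        self.optimized = list(optimized)
        self.non_optimized = list(non_optimized)
        self.io_limit = io_limit  # I/Os sent on a path before rotating
        self.up = set(self.optimized) | set(self.non_optimized)
        self.index = 0            # which optimized path is current
        self.sent = 0             # I/Os sent on the current path so far

    def set_state(self, path, available):
        """Mark a path as available or unavailable."""
        if available:
            self.up.add(path)
        else:
            self.up.discard(path)

    def select(self):
        live = [p for p in self.optimized if p in self.up]
        if not live:
            # Failover case: pin to one non-optimized path, no rotation.
            fallback = [p for p in self.non_optimized if p in self.up]
            if not fallback:
                raise RuntimeError("all paths to the device are down")
            return fallback[0]
        if self.sent >= self.io_limit:
            self.index += 1       # limit reached: move to the next path
            self.sent = 0
        self.sent += 1
        return live[self.index % len(live)]


rr = RoundRobinSelector(["A1", "A2"], ["B1"], io_limit=2)
rotation = [rr.select() for _ in range(6)]
rr.set_state("A1", False)
rr.set_state("A2", False)
pinned = [rr.select() for _ in range(3)]
```

With `io_limit=2` the selector sends two I/Os down A1, two down A2, and so on, and never touches B1 while an optimized path is alive. Note what is absent from the model: nothing looks at queue depth or latency, which is exactly the "not adaptive" caveat mentioned above.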

- What is Fixed aka VMW_PSP_FIXED

This plug-in uses the designated preferred path, if one has been configured. Otherwise, it uses the first working path discovered at system boot time. If the host cannot use the preferred path, it selects a random alternative available path. The host automatically reverts to the preferred path as soon as that path becomes available again.
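The Fixed behaviour can be sketched the same way in Python. Again this is only a model built from the description above, not the VMW_PSP_FIXED implementation: `FixedSelector`, its methods and the path names are hypothetical, and the sketch assumes the first path in the list is the first working path discovered at boot.

```python
import random


class FixedSelector:
    """Toy model of the Fixed path selection policy (hypothetical class)."""

    def __init__(self, paths, preferred=None, rng=None):
        self.paths = list(paths)
        self.up = set(self.paths)
        # No configured preference: assume the first discovered path works.
        self.preferred = preferred if preferred is not None else self.paths[0]
        self.rng = rng or random.Random()
        self.alternative = None   # path in use while the preferred one is down

    def set_state(self, path, available):
        """Mark a path as available or unavailable."""
        if available:
            self.up.add(path)
        else:
            self.up.discard(path)

    def select(self):
        if self.preferred in self.up:
            self.alternative = None        # automatic failback
            return self.preferred
        if self.alternative not in self.up:
            candidates = [p for p in self.paths if p in self.up]
            if not candidates:
                raise RuntimeError("all paths to the device are down")
            self.alternative = self.rng.choice(candidates)  # random pick
        return self.alternative


fixed = FixedSelector(["P1", "P2", "P3"], preferred="P2")
normal = fixed.select()
fixed.set_state("P2", False)
during_outage = fixed.select()
fixed.set_state("P2", True)
after_repair = fixed.select()
```

Compared with MRU, the difference is the automatic failback in the first branch of `select`: as soon as the preferred path is usable again, I/O moves back to it.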

The way this policy behaves shows clearly that it is not intended for A/P or asymmetric A/A storage devices, mainly because of an issue called path thrashing.

A quick note about path thrashing: some active/passive arrays attempt to look like active/active arrays by passing the ownership of the LUN to the various SPs as I/O arrives. This approach works in a small cluster setup. If many ESX/ESXi systems access the same LUN concurrently through different SPs, the result is path thrashing.

Here is an example of path thrashing. If server A can reach a LUN only through one SP, and server B can reach the same LUN only through a different SP, they both continually cause the ownership of the LUN to move between the two SPs, ping-ponging the ownership of the LUN. Because the system moves the ownership quickly, the storage array cannot process any I/O or just a few. As a result, any servers that depend on the LUN will experience low throughput due to the long time it takes to complete each I/O request.
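The ping-pong effect in this example can be illustrated with a tiny Python simulation. It is a deliberately simplified model with made-up names (`count_ownership_moves`, `SPA`, `SPB`): it assumes the array moves LUN ownership to whichever SP each I/O arrives on, and it simply counts those moves.

```python
def count_ownership_moves(io_sequence, initial_owner="SPA"):
    """Count LUN ownership changes for a sequence of I/Os.

    Each item in io_sequence names the SP an I/O arrives on. In this
    simplified model the array hands ownership to that SP if needed.
    """
    owner = initial_owner
    moves = 0
    for sp in io_sequence:
        if sp != owner:
            owner = sp    # ownership ping-pongs to the other SP
            moves += 1
    return moves


# Server A only reaches SPA, server B only reaches SPB, I/Os interleaved.
thrashing = count_ownership_moves(["SPA", "SPB"] * 4)

# Both servers reach the LUN through the owning SP: ownership never moves.
healthy = count_ownership_moves(["SPA"] * 8)
```

In the thrashing case almost every single I/O triggers an ownership move, and since each move takes the array far longer than serving an I/O, throughput collapses.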

VMware NMP Flow of I/O

When a virtual machine issues an I/O request to a storage device managed by the NMP, the following process takes place.

- The NMP calls the PSP assigned to this storage device.

- The PSP selects an appropriate physical path on which to issue the I/O.

- If the I/O operation is successful, the NMP reports its completion.

- If the I/O operation reports an error, the NMP calls an appropriate SATP.

- The SATP interprets the I/O command errors and, when appropriate, activates inactive paths.

- The PSP is called to select a new path on which to issue the I/O.

Note that the PSP checks every 300 seconds whether the path is active again. The SATP is responsible for performing the failover.

This ends part one of this post. I expect to publish part two next week or so. If you found this post useful, do not hesitate to pass it on to your friends with the Share this toolbar just below! Thx.

[UPDATE] It took some time, but part two is now available here 😉
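The I/O flow listed above can be sketched as a short retry loop in Python. This is a rough model of the sequence of calls only, not how the VMkernel is written: the function `nmp_issue_io`, the three callbacks, the `max_attempts` limit and the two-path device are all hypothetical.

```python
def nmp_issue_io(psp_select, send_io, satp_handle_error, max_attempts=3):
    """Toy model of the NMP flow: the PSP picks a path, the I/O is issued,
    and on error the SATP reacts before the PSP is asked again."""
    log = []
    for _ in range(max_attempts):
        path = psp_select()              # the PSP selects a physical path
        log.append(("issue", path))
        if send_io(path):                # success: the NMP reports completion
            log.append(("complete", path))
            return True, log
        log.append(("error", path))
        satp_handle_error(path)          # the SATP interprets the error
    return False, log


# Hypothetical two-path device: the active path is broken, the standby works.
state = {"active": "path-A", "standby": "path-B", "broken": {"path-A"}}


def psp_select():
    return state["active"]


def send_io(path):
    return path not in state["broken"]


def satp_handle_error(path):
    # Activate the inactive path by swapping the two roles.
    state["active"], state["standby"] = state["standby"], state["active"]


ok, log = nmp_issue_io(psp_select, send_io, satp_handle_error)
```

The resulting log shows the division of labour: the first attempt fails on path-A, the SATP callback activates path-B, and the PSP callback then hands out the new path for the retry.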

Dell EqualLogic also has an MPP, the EqualLogic Multipathing Enhancement Module or EQL MEM, for iSCSI multipathing. Lots of good enhancements & unlike PowerPath/VE it’s free 🙂

Great article by the way. On to part 2.

Hi dboftlp and thx for your comment.

I didn’t know about the Dell plug-in. Good to hear it’s free as well.

Part two is to be found here https://deinoscloud.wordpress.com/2011/04/03/aloha-alua/ as the name doesn’t tell 😉

“Here is an example of path thrashing. If server A can reach a LUN only through one, and server B can reach the same LUN only through a different SP, they both continually cause the ownership of the LUN to move between the two SPs, ping-ponging the ownership of the LUN. Because the system moves the ownership quickly, the storage array cannot process any I/O or just a few. As a result, any servers that depend on the LUN will experience low throughput due to the long time it takes to complete each I/O request.”

We actually have the above-mentioned situation. We have 2 servers (Dell R610) which are connected to a Dell MD3000 over SAS (single path).

Is it possible to avoid the problems as you described?

Allert Lageweg

Hi Allert,

If I’m not wrong, with a single controller and two hosts, you must be in a non-HA controller scenario.

Although it is a dual-port controller, it’s still a single controller, thus you don’t have the path thrashing issue.

Path thrashing is something you see in dual-controller arrays, with either single-port or dual-port controllers.

Have a look at this PDF, especially chapter 5.1.3:

PowerVault_MD3000_and_MD3000i_Array_Tuning_Best_Practices.pdf

Rgds,

Many thanks for your reaction.

The situation is that we have an MD3000 with two single-port controllers. Each controller has one R610 server connected to it: ESXi server 1 on controller 0, and ESXi server 2 on controller 1.

The two hosts are constantly fighting for control of the Virtual Disks.

Actually I think this must be path thrashing.

These events appear on both hosts:

“Frequent path state changes are occurring for path vmhba2:C0:T0:L3. This may indicate a storage problem. Affected device:”

Performance is so slow that it is not workable.

Regards Allert

Hi Allert,

My fault, I did not catch that you have 2 controllers in the array.

So yes your array has a path thrashing issue definitely.

Please make sure you follow VMware recommendation regarding your array, that is using VMW_PSP_MRU path policy: http://www.vmware.com/resources/compatibility/search.php?action=search&deviceCategory=san&productId=1&advancedORbasic=advanced&maxDisplayRows=50&key=MD3000&datePosted=-1&partnerId%5B%5D=-1&isSVA=0&rorre=0

I see two options:

1- Swap in two dual-port controllers in the array. In this configuration both ESXi hosts have a connection to each controller, which avoids path thrashing now and in the future.

2- Create two datastores where LUN1 is owned by controller1, connected to ESXi1, and LUN2 is owned by controller2, connected to ESXi2. Make sure that all VMs running on ESXi1 are located on datastore LUN1 and all VMs running on ESXi2 are located on datastore LUN2. This will fix the path thrashing issue right away, but forget about vMotion/DRS/HA, as they move VMs around between the two ESXi hosts, increasing the risk that VMs will run on an ESXi host that does not have direct access to the datastore/LUN through the owning controller.

Hi Deino,

I find your answer very helpful. I will try the scenario you described in option 2.

I will contact Dell; they sold us this solution and never mentioned the path thrashing issue. Maybe they will swap the controllers for dual-port ones 🙂

Greetings

Hi Allert, please let us know any progress on this matter as it can help other readers as well. Thx!

Great helpful article, thanks for this

Hi, I’m a little lost here. I’m trying to test failover situations with a SAS array of tape drives. When I connect both drives, ESXi 5 detects the 2 “Serial Attached SCSI tape” devices and 1 “Serial Attached SCSI Medium Changer”, but when I check the paths it only shows one path.

Am I missing some configuration?

Something different happens if I connect a Fibre Channel tape array: ESXi 5 shows 4 paths, since I have 2 ports of the HBA connected and 1 port of each drive connected to the zone. With this config I can perform the failovers without problems.

Old but AWESOME post, my friend!

Cheers,